Saran Alla's Email & Phone Number

Cloud | Big Data | BlockChain | Real Time | Containers

Saran Alla Email Addresses

Saran Alla Phone Numbers

Saran Alla's Work Experience

Williams-Sonoma, Inc.

Kafka Solutions Architect

February 2019 to April 2020

Barrick Gold Corporation

Cloud Big Data Platform Architect

March 2018 to January 2019

Cloudwick

Senior Hadoop Consultant

November 2013 to June 2014

Cloudwick

Hadoop Engineer

May 2012 to October 2013

J B Group of Educational Institutions

System Administrator

January 2007 to January 2011


Saran Alla's Education

University of California, San Diego

January 2012 to January 2012


Frequently Asked Questions about Saran Alla

What is Saran Alla's email address?

Email Saran Alla at [email protected] or [email protected]. These are the most recent email addresses found for Saran Alla in 2024.

How can I contact Saran Alla?

To contact Saran Alla, send an email to [email protected] or [email protected].

What company does Saran Alla work for?

Saran Alla works for Cloudera.

What is Saran Alla's role at Cloudera?

Saran Alla is a CDP Migration / Solutions Architect (Delivery).

What is Saran Alla's Phone Number?

Saran Alla's phone number is (**) *** *** 309.

What industry does Saran Alla work in?

Saran Alla works in the Computer Software industry.


Saran Alla Email Addresses

Saran Alla Phone Numbers

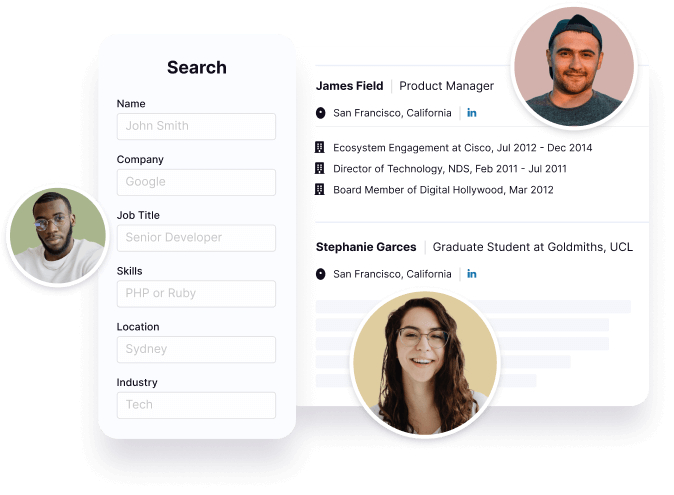

Find emails and phone numbers for 300M professionals.

Search by name, job titles, seniority, skills, location, company name, industry, company size, revenue, and other 20+ data points to reach the right people you need. Get triple-verified contact details in one-click.In a nutshell

Saran Alla's Personality Type

Introversion (I), Intuition (N), Thinking (T), Judging (J)

Average Tenure

2 years, 0 months

Saran Alla's Willingness to Change Jobs


Open to opportunity?

There's a 92% chance that Saran Alla is seeking new opportunities.


Saran Alla's Social Media Links

/in/saranreddy /company/bank-of-the-west /school/ucsandiego/