Pardeep Kumar's Email & Phone Number

Data & AI Leader - Retail & CPG

Pardeep Kumar Email Addresses

Pardeep Kumar's Work Experience

Tech Mahindra

Big Data Consultant - Lumeris

February 2014 to August 2014

Tech Mahindra

Big Data Practice Lead, COE

October 2010 to August 2014

Mahindra Satyam

Big Data Practice Lead, COE

October 2010 to August 2014

Tech Mahindra

Oracle DBA

October 2010 to January 2011

ITWS India

Software Engineer

July 2010 to October 2010

Yahoo Software Development India Private Limited

Software Engineering Intern

January 2010 to June 2010

Sr. Solutions Architect - Strategic

Pardeep Kumar's Education

Punjab Technical University

Frequently Asked Questions about Pardeep Kumar

What is Pardeep Kumar's email address?

Pardeep Kumar can be emailed at [email protected] and [email protected]. These are the most up-to-date email addresses found for Pardeep Kumar as of 2024.

What is Pardeep Kumar's phone number?

Pardeep Kumar's phone numbers are +91-9970609332 and +1-3142813354.

How to contact Pardeep Kumar?

To contact Pardeep Kumar, send an email to [email protected] or [email protected]. To call Pardeep Kumar, try +91-9970609332 or +1-3142813354.

What company does Pardeep Kumar work for?

Pardeep Kumar works for Databricks.

What is Pardeep Kumar's role at Databricks?

Pardeep Kumar is a Sr. Solutions Architect, Team Lead - Retail & CPG.

What industry does Pardeep Kumar work in?

Pardeep Kumar works in the Information Technology & Services industry.

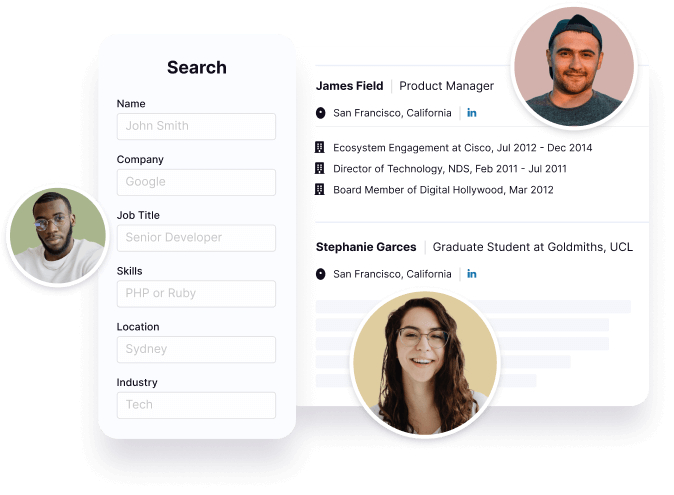

Find emails and phone numbers for 300M professionals.

Search by name, job titles, seniority, skills, location, company name, industry, company size, revenue, and other 20+ data points to reach the right people you need. Get triple-verified contact details in one-click.In a nutshell

Pardeep Kumar's Personality Type

Introversion (I), Intuition (N), Thinking (T), Judging (J)

Average Tenure

2 years, 0 months

Pardeep Kumar's Willingness to Change Jobs

Open to opportunity?

There is a 96% chance that Pardeep Kumar is seeking new opportunities.

Pardeep Kumar's Social Media Links

/in/pardeepkumarmishra /school/punjab-technical-university/ /company/databricks